
How Often Should AI Benchmarks Be Updated? 🔄 (2025 Guide)

Imagine running a race where the finish line keeps moving — that’s exactly what happens with AI benchmarks in today’s breakneck tech landscape. With AI models like GPT-4 and beyond pushing the limits every few months, the question isn’t just if benchmarks should be updated, but how often and why they must evolve to keep pace. Spoiler alert: outdated benchmarks can mislead researchers, stall innovation, and even compromise safety.

In this comprehensive guide, we at ChatBench.org™ unpack the perfect update rhythm for AI benchmarks, balancing rapid tech advances with the need for stability and trust. From explosive performance leaps to emerging ethical demands, we reveal the key signals that scream “time for a refresh” — plus expert tips on how frameworks like PyTorch and TensorFlow influence the cycle. Curious about how government regulations and open-source communities shape this dynamic? Stick around, because we’ve got you covered.

Key Takeaways

- AI benchmarks must be updated dynamically, typically every 12-18 months in fast-moving areas, to reflect rapid performance improvements and new capabilities.

- Benchmark saturation and “teaching to the test” are clear signs that an update or new benchmark is overdue.

- A hybrid update model works best: stable core benchmarks updated predictably, plus experimental tests for emerging challenges.

- Framework developments (PyTorch, TensorFlow) and hardware advances directly impact benchmark relevance and update timing.

- Regulatory and ethical considerations increasingly demand benchmarks that measure safety, fairness, and trustworthiness, not just raw accuracy.

- Open-source communities accelerate benchmark evolution by democratizing testing and exposing flaws faster.

Ready to master the art of timely AI benchmark updates and stay ahead in the AI race? Let’s dive in!

Table of Contents

- ⚡️ Quick Tips and Facts on AI Benchmark Updates

- 🔍 Understanding the Evolution of AI Benchmarks: A Historical Perspective

- 🧠 Why AI Benchmarks Matter: Impact on Technology and Framework Development

- ⏰ How Often Should AI Benchmarks Be Updated? Key Factors to Consider

- 1️⃣ Signs It’s Time to Refresh AI Benchmarks: Detecting Obsolescence

- 2️⃣ Balancing Frequency and Stability: Finding the Sweet Spot for Updates

- 3️⃣ The Role of AI Framework Development in Benchmark Update Cycles

- 🔧 Methodologies for Updating AI Benchmarks: Best Practices and Tools

- 🤖 Case Studies: How Leading AI Benchmarks Adapt to Rapid Technological Advances

- 📊 Evaluating Benchmark Performance: Metrics and Validation Techniques

- 🌐 The Influence of Open Source and Community Contributions on Benchmark Evolution

- 🔒 Ensuring Trustworthiness and Security in Updated AI Benchmarks

- ⚖️ Regulatory and Ethical Considerations in Benchmark Updates

- 💡 Future Trends: Predicting How AI Benchmarking Will Evolve with Emerging Technologies

- 📚 Recommended Reading and Resources for AI Benchmark Enthusiasts

- 📝 Conclusion: Mastering the Art of Timely AI Benchmark Updates

- 🔗 Recommended Links for Deep Dives into AI Benchmarking

- ❓ FAQ: Your Burning Questions About AI Benchmark Updates Answered

- 📖 Reference Links and Citations


⚡️ Quick Tips and Facts on AI Benchmark Updates

Welcome to the wild, wonderful world of AI benchmarking! Here at ChatBench.org™, we live and breathe this stuff. You’re probably wondering, “How often do these AI tests need a refresh?” The short answer? Way more often than you think! The AI world moves at lightning speed, and the yardsticks we use to measure it need to keep up. Can AI benchmarks be used to compare the performance of different AI frameworks? Absolutely, and that’s a key reason they must stay current. You can read more about that in our in-depth article.

Before we dive deep, here are some mind-blowing facts to set the stage:

| Quick Fact 🤯 | Why It Matters for Benchmark Updates |
|---|---|
| Blazing Fast Progress | According to the Stanford HAI 2025 AI Index Report, performance on new, difficult benchmarks is skyrocketing. For example, performance on the SWE-bench for coding jumped by 67.3 percentage points in just one year! This means a benchmark can become “too easy” in a ridiculously short time. |
| Government is Watching | The U.S. Executive Order on AI calls for the creation of “guidance and benchmarks for evaluating and auditing AI capabilities.” This signals a major shift towards standardized, regularly updated evaluations that focus on safety and security, not just raw performance. |
| It’s a Crowded Field | The performance gap between the top AI models is shrinking fast. In 2024, the top two models were separated by just 0.7% on key benchmarks. This intense competition means benchmarks must be sensitive enough to detect subtle differences, pushing for more frequent and nuanced updates. |
| Beyond Performance | Modern benchmarks are moving past just measuring accuracy. New benchmarks like HELM Safety and AIR-Bench are emerging to evaluate critical aspects like factuality, safety, and fairness. This reflects a growing understanding that a “good” AI is more than just a powerful one. |

🔍 Understanding the Evolution of AI Benchmarks: A Historical Perspective

Back in the day, our team was thrilled when a model could correctly identify handwritten digits from the MNIST dataset with high accuracy. It was a huge deal! But today, that task is considered a “hello world” project for AI students. What happened?

It’s simple: the student outgrew the test.

AI evolution is a relentless march forward. The benchmarks that once seemed like insurmountable peaks are now just foothills. This journey tells us everything we need to know about why and how often updates are necessary.

- The Early Days (The “Simple” Era): We started with clear-cut tasks. Think ImageNet for object recognition. These benchmarks were revolutionary, launching the deep learning explosion. They were stable for years because the challenge was immense.

- The Language Puzzle (The “Understanding” Era): Then came the challenge of language. Benchmarks like the General Language Understanding Evaluation (GLUE) set the standard. But models like Google’s BERT and OpenAI’s GPT series quickly “saturated” it, meaning they performed so well that the test was no longer a good differentiator.

- The “Harder, Better, Faster, Stronger” Era: The community responded with SuperGLUE, a much harder suite of language tasks. This cat-and-mouse game is a core theme. As soon as a benchmark is “solved,” a new, tougher one must take its place. This is a central topic in our LLM Benchmarks category.

- The Modern Multiverse (The “Reasoning & Responsibility” Era): Today, we’re in a new dimension. Benchmarks like MMLU (Massive Multitask Language Understanding) test for broad knowledge and reasoning. At the same time, there’s a huge push for benchmarks that measure safety, ethics, and bias, reflecting the real-world deployment of AI in sensitive fields like healthcare.

This history isn’t just a fun trip down memory lane; it’s the central thesis. Benchmarks don’t just measure progress; they actively shape it. And when the map no longer reflects the territory, it’s time for a new map.

🧠 Why AI Benchmarks Matter: Impact on Technology and Framework Development

Ever heard the saying, “What gets measured, gets managed”? In the AI universe, it’s more like, “What gets benchmarked, gets built.” Benchmarks are the North Star for an entire industry of researchers, engineers, and businesses. They are the driving force behind innovation.

Here’s why they are so critical:

- Fueling the Competitive Fire: Let’s be honest, nothing lights a fire under engineering teams like a public leaderboard. When OpenAI releases a new GPT model that tops the charts, you can bet teams at Google, Anthropic, and Meta are already working to beat it. The Stanford HAI report notes, “The frontier is increasingly competitive—and increasingly crowded.” This fierce competition, guided by benchmarks, accelerates progress for everyone.

- Guiding Framework Development: AI frameworks like PyTorch and TensorFlow aren’t developed in a vacuum. Their features are heavily influenced by the demands of state-of-the-art models trying to crush the latest benchmarks. Need to train a larger model faster? Frameworks will add optimized data loaders and distributed training libraries. This synergy means that as benchmarks evolve, so do the very tools we use to build AI.

- Setting Industry Standards: For businesses looking to integrate AI, benchmarks provide a crucial, if imperfect, guide. They help answer questions like, “Which model is best for customer service chatbots?” or “Which API is the most efficient for our data analysis pipeline?” Our AI Business Applications section explores this in more detail. A good benchmark helps separate the marketing hype from actual performance.

But what happens when that North Star starts pointing south? An outdated benchmark can lead the entire industry astray, optimizing for problems that no longer matter. This is the core tension we’re exploring.

⏰ How Often Should AI Benchmarks Be Updated? Key Factors to Consider

So, we’ve arrived at the million-dollar question. Is there a magic number? Every six months? Every year? Every time a new AI model drops that breaks the internet?

The honest answer from our team at ChatBench.org™ is: It’s a dynamic process, not a fixed schedule. The update frequency depends on a delicate balance of several key factors. Think of it like tuning a high-performance engine; you adjust based on feedback and conditions, not just the calendar.

Here are the primary dials we need to watch:

| Factor Influencing Update Frequency | Description & Impact |
|---|---|
| Pace of AI Advancement 🚀 | High Impact. When models improve by double-digit percentages in a single year on a benchmark, its lifespan is short. We’re talking a potential update cycle of 12-18 months for fast-moving domains like coding or reasoning. |
| Emergence of New Capabilities 🧠 | Medium Impact. When fundamentally new AI abilities emerge (e.g., multi-modality, in-context learning), existing benchmarks become partially obsolete. This might trigger a major new benchmark version or a new benchmark altogether, perhaps on a 2-3 year cycle. |
| Real-World Applicability 🏥 | High Impact. As AI moves into critical sectors like healthcare, benchmarks must reflect real-world needs. An AI excelling at web searches might fail at interpreting medical scans. The PMC article on AI in healthcare highlights the need for systems that are not just accurate but also safe and ethical. This requires continuous, domain-specific benchmark development. |
| Community & Stability 🤝 | Low Impact on Frequency. While crucial, community consensus and the need for stability act as a brake, not an accelerator. Major benchmark suites like MLPerf have scheduled update cadences to provide predictability, preventing a constant state of flux. This creates a healthy tension between staying current and providing a stable target for researchers. |

1️⃣ Signs It’s Time to Refresh AI Benchmarks: Detecting Obsolescence

How do you know when a benchmark has gone from a challenging test to a pointless exercise? It starts to give off some tell-tale signs, like a carton of milk past its expiration date. Here’s what we look for:

- ✅ Benchmark Saturation: This is the most obvious sign. When multiple top models, like those from Google, OpenAI, and Anthropic, are all scoring in the high 90s, the test is no longer distinguishing the best from the rest. It’s time for a harder test.

- ✅ “Teaching to the Test”: We see this when models start exploiting loopholes or statistical quirks in the dataset rather than demonstrating genuine intelligence. They learn the test’s biases, not the underlying skill.

- ✅ A Widening Gap with Reality: If a model aces a benchmark but fails spectacularly at the real-world version of that task, the benchmark is broken. For example, a chatbot that scores perfectly on a Q&A dataset but can’t handle a normal human conversation.

- ✅ Emergence of New Architectures: When a new model architecture (like the original Transformer) fundamentally changes the game, benchmarks designed for older systems (like RNNs) become less relevant.

- ✅ The Community Gets Bored: When researchers stop submitting to a benchmark or start heavily criticizing it in papers, its influence is waning. The collective attention of the AI world has moved on.
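To make the saturation sign a little more concrete, here is a minimal sketch of how one might flag it from a leaderboard. The function names, the 0.95 ceiling, the top-3 cutoff, and the leaderboard numbers are all assumptions for illustration, not part of any official benchmark tooling. The idea: if the best models sit near the ceiling and the gap between them is smaller than the sampling noise of the test set itself, the benchmark can no longer tell them apart.

```python
import math
from statistics import mean

def accuracy_noise(p: float, n_items: int, z: float = 1.96) -> float:
    """Approximate 95% half-width of an accuracy estimate measured on n_items questions."""
    return z * math.sqrt(p * (1.0 - p) / n_items)

def looks_saturated(scores: dict, n_items: int, ceiling: float = 0.95, top_k: int = 3) -> bool:
    """Heuristic: the top-k models are near the ceiling and closer together than the noise."""
    top = sorted(scores.values(), reverse=True)[:top_k]
    avg = mean(top)
    spread = top[0] - top[-1]           # gap between best and k-th best model
    noise = accuracy_noise(avg, n_items)
    return avg >= ceiling and spread <= noise

# Hypothetical leaderboard: accuracy as a fraction, measured on 1,000 test items.
leaderboard = {"model_a": 0.971, "model_b": 0.968, "model_c": 0.964, "model_d": 0.910}
print(looks_saturated(leaderboard, n_items=1000))
```

With these made-up numbers the top three models are separated by less than one percentage point, which is inside the roughly one-point noise band of a 1,000-item test, so the check fires. A real maintainer would likely tune the thresholds per benchmark and look at trends over several releases rather than a single snapshot.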

2️⃣ Balancing Frequency and Stability: Finding the Sweet Spot for Updates

This is the great debate in the benchmarking world. If you update too fast, you create chaos. If you update too slowly, you become irrelevant.

The Case for Rapid Updates (The “Move Fast and Break Things” Approach):

- Pros: ✅ Stays perfectly in sync with the cutting edge. ✅ Drives innovation at a blistering pace.

- Cons: ❌ Makes it impossible to track progress over time. How can you tell if a 2025 model is better than a 2024 model if the test changed completely? ❌ It’s exhausting and expensive for everyone to constantly adapt.

The Case for Slower, Stable Updates (The “Slow and Steady Wins the Race” Approach):

- Pros: ✅ Provides a stable, reliable yardstick for longitudinal studies. ✅ Gives researchers a clear, consistent target to aim for.

- Cons: ❌ Can become obsolete, rewarding models for solving yesterday’s problems. ❌ May fail to capture new, important AI capabilities.

So what’s the solution? We’re big fans of a hybrid model. Organizations like MLCommons, which manages the influential MLPerf benchmarks, do this well. They have a stable core set of tests run on a predictable schedule (the main MLPerf Training and Inference results are typically published in twice-yearly rounds), while also introducing new, “beta” benchmarks to explore emerging areas. This gives the community the best of both worlds: stability and relevance.

3️⃣ The Role of AI Framework Development in Benchmark Update Cycles

You can’t talk about benchmarks without talking about the software frameworks that power them. The relationship between benchmarks like MMLU and frameworks like PyTorch is deeply symbiotic.

Think about it: when a new, massive benchmark is released, it often requires more memory, faster processing, or new algorithmic tricks. This puts pressure on framework developers at NVIDIA (CUDA), Google (TensorFlow/JAX), and Meta (PyTorch) to optimize their code.

- Hardware Integration: A new generation of GPUs or TPUs from companies like NVIDIA or Google might offer new types of processing cores. Benchmarks need to be updated to include tasks that can actually leverage this new hardware, otherwise, its potential goes unmeasured.

- Software Optimizations: New techniques like quantization (reducing model precision to save memory and speed up inference) or flash attention become popular because they help models perform better and more efficiently on existing benchmarks. Eventually, benchmarks may be updated to specifically measure the effectiveness of these techniques.

- Ease of Use: As frameworks make it easier to build complex models, it accelerates the rate at which benchmarks are “solved.” This, in turn, accelerates the need for new, harder benchmarks.
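For readers who haven’t seen quantization up close, here is a toy sketch of the idea mentioned above: storing weights as small integers plus one scale factor. This is a simplified symmetric int8 scheme written in plain Python for illustration; the function names and sample weights are our own assumptions, and production frameworks use more sophisticated variants.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0   # one float shared by the whole tensor
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the shared scale."""
    return [q * scale for q in quantized]

# Hypothetical weights, chosen only to keep the arithmetic easy to follow.
weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

The trade-off is visible even in this tiny example: each weight now fits in one byte, but the smallest value is rounded away to zero. A benchmark that wants to measure quantization fairly would need to report both the efficiency gain and the accuracy lost to this kind of rounding.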

For anyone building in this space, our Developer Guides offer practical advice on navigating this co-evolution of hardware, software, and evaluation.

🔧 Methodologies for Updating AI Benchmarks: Best Practices and Tools

Creating or updating a benchmark isn’t as simple as writing a new quiz. It’s a rigorous, scientific process involving the entire AI community. Here’s a peek behind the curtain at how it’s done right:

- Community Consultation: It starts with workshops and papers where researchers debate the shortcomings of existing benchmarks and propose new directions.

- Task Design & Data Curation: This is the hardest part. Teams must design novel tasks that test for genuine capabilities and collect or generate massive, high-quality datasets that are free from bias and contamination (i.e., data the models might have already seen during training).

- Metric Selection: Moving beyond simple accuracy is key. Modern benchmarks incorporate metrics for fairness, robustness, efficiency, and explainability.

- Baseline Establishment: The benchmark creators run a set of existing models against the new test to establish a “baseline” performance. This proves the test is solvable but still challenging.

- Launch and Maintenance: The benchmark is released, often with an ongoing leaderboard. The creators must then maintain it, handle submissions, and adjudicate any issues.

Tools of the Trade: The open-source community provides the essential toolkit for this work.

- Hugging Face: Their Datasets, Transformers, and Evaluate libraries are the de facto standard for accessing models and running evaluations.

- Papers with Code: The central hub for tracking benchmarks, leaderboards, and the papers that introduce them.

- Cloud Platforms: Running these massive evaluations requires serious compute power.


🤖 Case Studies: How Leading AI Benchmarks Adapt to Rapid Technological Advances

Theory is great, but let’s look at some real-world examples of benchmark evolution in action.

Case Study 1: The Great Leap from GLUE to SuperGLUE

- The Problem: By 2019, models like BERT had surpassed the “human baseline” on the GLUE benchmark for language understanding. It was officially “solved.”

- The Solution: A consortium of researchers created SuperGLUE. They kept the same multi-task format but designed much harder, more diverse tasks that required more complex reasoning.

- The Lesson: This was a perfect example of the community identifying saturation and acting decisively to create a next-generation replacement, raising the bar for everyone.

Case Study 2: MMLU and the Quest for General Knowledge

- The Problem: While models were acing specific language tasks, researchers wondered how to measure their broader, more generalized knowledge, akin to what a human learns in school.

- The Solution: MMLU was created, consisting of roughly 16,000 multiple-choice questions across 57 subjects, from high school math to professional law.

- The Lesson: MMLU showed the need for benchmarks that test the breadth of a model’s knowledge, not just its skill on a narrow task. It’s now a standard for evaluating large foundation models, and as the Stanford report notes, it’s a key arena where international competition is heating up. Check out our articles on Fine-Tuning & Training to see how developers prepare models for these comprehensive tests.

Case Study 3: MLPerf – The Industrial-Strength Benchmark

- The Problem: Academic benchmarks are great, but industry needs to measure performance on real-world systems—hardware and software combined. How fast can you train a model for object detection on a specific server configuration?

- The Solution: MLPerf, organized by MLCommons, is a suite of benchmarks for training and inference that is regularly updated to include new workloads like generative AI and recommendation engines.

- The Lesson: MLPerf demonstrates the “hybrid” update model. It has a regular, predictable cadence, allowing companies like NVIDIA, Google, and Intel to compete on a level playing field, while also evolving to stay relevant to the latest industry applications.

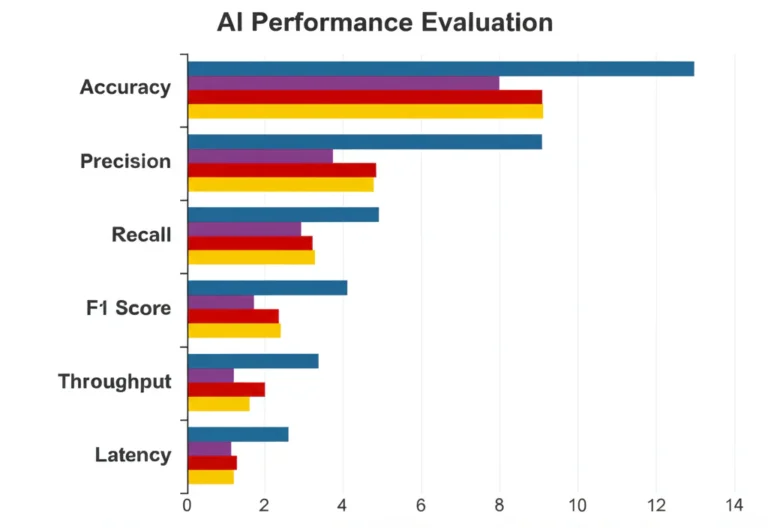

📊 Evaluating Benchmark Performance: Metrics and Validation Techniques

A benchmark is only as good as the metrics it uses. Simply saying a model is “95% accurate” doesn’t tell the whole story. At ChatBench.org™, we advocate for a more holistic approach to evaluation, using a dashboard of metrics.

- Performance Metrics: These are the classics.

  - Accuracy, F1-Score, Precision/Recall: For classification tasks.

  - BLEU, ROUGE: For translation and summarization tasks.

- Efficiency Metrics: In the real world, speed and cost matter. A lot.

  - Inference Latency: How long does it take to get an answer?

  - Training Cost/Time: How much money and energy does it take to train the model? The Stanford report highlights that inference costs have plummeted over 280-fold in just two years, making efficiency a fast-moving target to measure.

  - Model Size: Can this model run on a phone or does it need a data center?

- Robustness & Safety Metrics: How does the model behave under pressure?

  - Adversarial Testing: Does the model break when given slightly tricky or malicious inputs?

  - Out-of-Distribution (OOD) Performance: How well does it handle data it’s never seen before?

- Fairness and Bias Metrics: This is non-negotiable for responsible AI.

  - Disaggregated Performance: Does the model perform equally well across different demographic groups (e.g., race, gender)?

  - Stereotype and Toxicity Detection: Does the model generate harmful or biased content?
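Two of the metrics above are easy to show in a few lines. The sketch below computes precision, recall, and F1 for a binary classifier, then breaks accuracy down by group, which is the simplest form of disaggregated performance. The labels, predictions, and group names are invented for illustration, and the helper names are our own; in practice most teams would reach for an established metrics library instead.

```python
from collections import defaultdict

def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each group label."""
    correct, total = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}

# Hypothetical evaluation data: 8 examples split across two groups.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(precision_recall_f1(y_true, y_pred))
print(accuracy_by_group(y_true, y_pred, groups))
```

In this toy data the overall accuracy is 75%, which sounds fine, but the per-group view shows one group at 100% and the other at 50%. That is exactly the kind of gap a single headline number hides.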

Crucially, human evaluation remains the gold standard. No automated metric can perfectly capture the nuance of language or the quality of an image. Any good benchmark update process must include rigorous validation by human experts to ensure the metrics align with real-world quality.

🌐 The Influence of Open Source and Community Contributions on Benchmark Evolution

The evolution of AI benchmarks is not a top-down process dictated by a few big tech labs. It’s a vibrant, chaotic, and beautiful ecosystem driven by the open-source community.

Platforms like Hugging Face have been a total game-changer. By providing a central place to share models, datasets, and evaluation tools, they have democratized the ability to participate in benchmarking. Now, a PhD student in a university lab can run the same evaluations as a researcher at Google.

This has a profound effect on update cycles:

- Faster Discovery of Flaws: With thousands of people testing models on a benchmark, its weaknesses are exposed much more quickly.

- Democratized Innovation: New benchmark ideas and datasets can come from anywhere, not just established institutions.

- The Rise of Open Models: The Stanford report highlights that open-weight models are rapidly closing the performance gap with their closed-source counterparts. This is a direct result of the open-source community’s ability to collaborate, iterate, and improve models in the public domain. This competition from open models puts even more pressure on benchmarks to evolve, as the number of “state-of-the-art” contenders explodes.

🔒 Ensuring Trustworthiness and Security in Updated AI Benchmarks

As AI systems become more powerful and integrated into our lives, we have to move beyond just asking, “Is it smart?” to asking, “Can we trust it?” This is a central theme of the U.S. President’s Executive Order on AI, which explicitly calls for “robust, reliable, repeatable, and standardized evaluations of AI systems.”

This has massive implications for how benchmarks are designed and updated:

- Preventing Data Contamination: A huge challenge is ensuring that a benchmark’s test data hasn’t been accidentally included in the massive datasets used to train models. This would be like giving a student the answers before the exam. Future benchmarks will need sophisticated methods to detect and prevent this.

- AI Red-Teaming: The Executive Order mandates the creation of guidelines for “AI red-teaming,” a process of actively trying to make an AI model fail in dangerous ways. Benchmarks must evolve to incorporate these adversarial tests, measuring not just average-case performance but worst-case vulnerabilities.

- Evaluating for National Security Risks: The order also calls for tools to assess how AI could be misused for biosecurity or cybersecurity threats. This is a whole new frontier for benchmarking, moving from academic exercises to matters of public safety.

The future of benchmarking isn’t just a leaderboard; it’s a comprehensive safety and trustworthiness audit.
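As a rough illustration of the contamination problem described above, here is a minimal sketch of an n-gram overlap check between benchmark items and training text. Everything in it is an assumption made for the example: the function names, the whitespace tokenization, the choice of n, and the sample strings. Real contamination audits work on far larger corpora and typically use more careful normalization and longer n-grams.

```python
def ngrams(text: str, n: int) -> set:
    """Set of lowercase word n-grams in a text (naive whitespace tokenization)."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}

def contamination_rate(test_items, training_docs, n: int = 8) -> float:
    """Fraction of test items that share at least one n-gram with the training corpus."""
    corpus_ngrams = set()
    for doc in training_docs:
        corpus_ngrams |= ngrams(doc, n)
    flagged = [item for item in test_items if ngrams(item, n) & corpus_ngrams]
    return len(flagged) / len(test_items) if test_items else 0.0

# Hypothetical data: the first test item reuses a phrase from the training text.
training_docs = ["the quick brown fox jumps over the lazy dog near the river bank"]
test_items = [
    "Which animal is described? The quick brown fox jumps over the fence.",
    "What is the capital of France?",
]
print(contamination_rate(test_items, training_docs, n=5))
```

Here one of the two test items shares a five-word phrase with the training text, so half the benchmark is flagged. A check like this only catches verbatim overlap; paraphrased or translated leaks would slip through, which is part of why contamination remains an open problem.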

⚖️ Regulatory and Ethical Considerations in Benchmark Updates

For too long, AI ethics was treated as an afterthought. Those days are over. Regulatory frameworks like the EU AI Act, along with the principles outlined in the 2023 White House Executive Order (since rescinded in January 2025), have put ethics at the center of AI development.

The article on AI in healthcare provides a stark case study of why this is so important. When an AI is helping a doctor diagnose cancer, the stakes are infinitely higher than when it’s just generating a poem. The article emphasizes key ethical principles that must be measured: autonomy, safety, transparency, accountability, and equity.

How does this change benchmarking?

- From “Can it?” to “Should it?”: Benchmarks must be updated to include tests for ethical alignment. For example, does a medical diagnostic AI exhibit bias against certain patient populations?

- Measuring Explainability: A doctor can’t trust an AI’s recommendation if it’s a complete “black box.” As one source notes, “AI technologies should be as explainable as possible, and tailored to the comprehension levels of their intended audience.” New benchmarks are needed to quantify the quality of an AI’s explanations.

- Accountability Challenges: One of the biggest open challenges is assigning responsibility when an AI system makes a mistake. While benchmarks can’t solve this legal and philosophical problem, they can be designed to identify the conditions under which an AI is likely to fail, providing crucial data for risk management and oversight.

The regulatory landscape is pushing for a new generation of benchmarks that measure not just capability, but also character.

💡 Future Trends: Predicting How AI Benchmarking Will Evolve with Emerging Technologies

Looking into our crystal ball here at ChatBench.org™, the future of benchmarking looks even more dynamic and, frankly, more intelligent. The static, fill-in-the-blank tests of the past are giving way to living, breathing evaluation ecosystems.

Here’s what we see on the horizon:

- Interactive & Embodied AI Benchmarks: Forget static datasets. The next frontier is evaluating AIs in dynamic, interactive environments. Think of an AI that has to learn to use a new piece of software by itself, or a robot that has to navigate a real, cluttered room.

- Real-World Deployment as the Benchmark: The ultimate test is live performance. We’ll see more frameworks for continuously evaluating models after they’ve been deployed, using real user interactions as the data source.

- Multi-Agent Simulations: As we develop AIs that can collaborate and negotiate, we’ll need benchmarks that can evaluate these social and strategic skills. Imagine a benchmark where two AIs have to work together to solve a complex problem.

- Self-Evolving Benchmarks: This is the really wild one. What if AIs could help us design the next generation of benchmarks? An AI could probe another AI for weaknesses and generate novel, challenging problems that human designers might never think of.

So, with benchmarks themselves becoming intelligent and adaptive, where does that leave us in this perpetual race between creator and creation? That’s a question that will keep us all busy for years to come.

📝 Conclusion: Mastering the Art of Timely AI Benchmark Updates

Phew! What a journey through the fast-paced, ever-evolving world of AI benchmarks. If there’s one takeaway from our deep dive at ChatBench.org™, it’s this: AI benchmarks are living instruments, not static trophies. They must be updated thoughtfully, balancing the urgent need to reflect rapid technological leaps with the necessity of stability and trustworthiness.

We started with the question, “How often should AI benchmarks be updated?” and found that the answer isn’t a simple calendar date but a dynamic interplay of factors — from the pace of AI innovation and emergence of new capabilities, to regulatory demands and real-world applicability. The rapid performance gains highlighted by the Stanford AI Index and the U.S. Executive Order’s call for continuous evaluation underscore the urgency of frequent, yet measured updates.

Benchmarks shape the AI ecosystem: they drive framework improvements, fuel competition, and guide ethical deployment. But if they lag behind, they risk misleading developers and businesses, or worse, enabling unsafe AI systems. The best approach is a hybrid update model — stable core benchmarks updated on a predictable schedule, complemented by agile, experimental tests that push the frontier.

Looking ahead, the future of benchmarking is thrillingly complex: interactive environments, multi-agent simulations, and even AI-generated benchmarks. As AI systems become more capable and embedded in critical sectors like healthcare, trustworthiness and safety will be as important to measure as raw performance.

So, whether you’re a researcher, developer, or business leader, keeping an eye on benchmark evolution isn’t just academic — it’s essential for staying competitive and responsible in the AI revolution.

🔗 Recommended Links for Deep Dives into AI Benchmarking

Ready to explore more? Here are some top resources and tools that we at ChatBench.org™ recommend for staying ahead in AI benchmarking:

Books to Deepen Your Understanding:

- “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville

- “Artificial Intelligence: A Modern Approach” by Stuart Russell and Peter Norvig

- “Human Compatible: Artificial Intelligence and the Problem of Control” by Stuart Russell


❓ FAQ: Your Burning Questions About AI Benchmark Updates Answered

What factors determine the ideal frequency for updating AI benchmarks?

The ideal update frequency depends on multiple intertwined factors:

- Pace of AI Innovation: Rapid breakthroughs, like those seen with GPT-4 or multimodal models, shorten the useful lifespan of benchmarks. When performance jumps by double-digit percentages annually, benchmarks may need updates every 12-18 months.

- Emergence of New Capabilities: When AI systems acquire fundamentally new skills (e.g., reasoning, multi-modality), existing benchmarks may no longer capture these abilities, necessitating new or extended benchmarks.

- Real-World Relevance: Benchmarks must reflect the domains where AI is deployed. For example, healthcare AI requires specialized, frequently updated benchmarks to ensure safety and efficacy.

- Community and Industry Stability: Frequent changes can cause confusion and hinder longitudinal comparisons. Thus, a balance is needed to maintain stable core benchmarks with periodic refreshes.

- Regulatory and Ethical Requirements: Increasingly, benchmarks must incorporate measures of fairness, transparency, and safety, which evolve as societal expectations and laws change.


How do frequent AI benchmark updates impact competitive advantage in tech industries?

Frequent updates can be a double-edged sword:

- Positive Impacts:

  - Accelerate Innovation: Companies that adapt quickly to new benchmarks can showcase superior capabilities, attracting customers and talent.

  - Drive Differentiation: New benchmarks can reveal subtle strengths and weaknesses, helping firms fine-tune models for specific applications.

  - Encourage Responsible AI: Incorporating safety and fairness metrics helps companies build trustworthy products, improving brand reputation.

- Challenges:

  - Resource Intensive: Constantly adapting to new benchmarks requires significant engineering and computational resources.

  - Short-Term Focus Risk: Firms might optimize narrowly for the latest benchmark rather than broader, long-term goals.

Overall, staying aligned with benchmark evolution is crucial for maintaining a competitive edge, but it requires strategic planning and investment.

What are the challenges in maintaining up-to-date AI benchmarks with rapid framework changes?

Rapid changes in AI frameworks like PyTorch, TensorFlow, and emerging tools introduce several challenges:

- Compatibility Issues: New framework versions may break benchmark code or require significant refactoring.

- Hardware Dependencies: Benchmarks must evolve to leverage new hardware capabilities (e.g., GPUs, TPUs), complicating standardization.

- Data Contamination Risks: As frameworks facilitate easier data access, ensuring test datasets remain unseen during training becomes harder.

- Balancing Stability and Innovation: Framework updates push for new benchmark features, but frequent changes can disrupt longitudinal studies and reproducibility.

- Community Coordination: Synchronizing updates across diverse stakeholders (academia, industry, regulators) is complex but essential.

How can businesses leverage updated AI benchmarks to enhance their innovation strategies?

Businesses can use updated benchmarks as strategic tools by:

- Benchmark-Driven R&D: Aligning research goals with benchmark challenges to prioritize impactful innovations.

- Vendor and Model Selection: Using benchmarks to objectively compare third-party AI models and frameworks, ensuring best fit for business needs.

- Risk Management: Employing benchmarks that include safety, fairness, and robustness metrics to mitigate ethical and legal risks.

- Talent Development: Training teams on the latest benchmarks fosters cutting-edge skills and attracts top talent.

- Market Positioning: Publicly demonstrating leadership on respected benchmarks can boost credibility and investor confidence.

By integrating benchmark insights into decision-making, businesses can accelerate innovation while managing risks effectively.

📖 Reference Links and Citations

- Stanford Human-Centered AI Institute, 2025 AI Index Report: https://hai.stanford.edu/ai-index/2025-ai-index-report

- U.S. White House, Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence: https://bidenwhitehouse.archives.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence/

- National Institutes of Health, AI in Healthcare: Ethical and Regulatory Challenges: https://pmc.ncbi.nlm.nih.gov/articles/PMC10879008/

- MLCommons, MLPerf Benchmark Suite: https://mlcommons.org/en/mlperf/

- Hugging Face, Datasets and Evaluation Tools: https://huggingface.co/docs/datasets/

- Papers with Code, AI Benchmarks and Leaderboards: https://paperswithcode.com/benchmarks

- PyTorch Official Website: https://pytorch.org/

- TensorFlow Official Website: https://www.tensorflow.org/

- NVIDIA Official Website: https://www.nvidia.com/en-us/

- DigitalOcean AI & Machine Learning Solutions: https://www.digitalocean.com/solutions/ai-machine-learning

- Paperspace Cloud GPU Platform: https://www.paperspace.com/

- RunPod GPU Cloud Platform: https://www.runpod.io/gpu-cloud

Thanks for joining us on this deep dive into the pulse of AI benchmarking! Stay curious, stay competitive, and keep benchmarking smarter with ChatBench.org™.